Microsoft Fabric Analytics Engineer Associate (DP-600) Certification - My Experience & Tips

TLDR

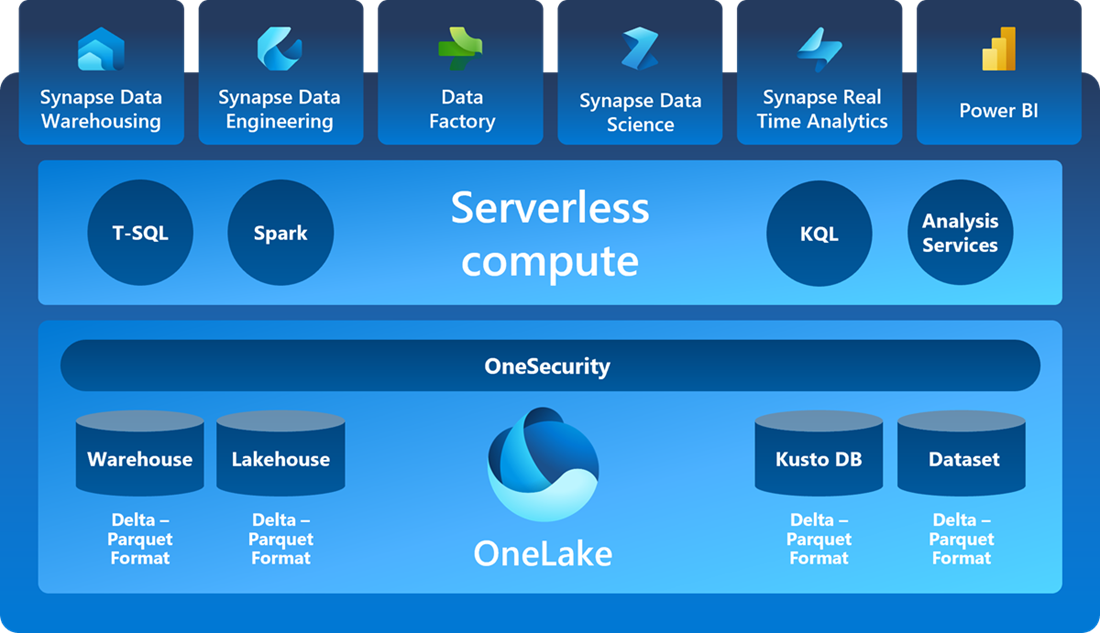

The Fabric Analytics Engineer Associate certification is intended to cover almost all generally available functionality of Fabric at the time of writing. Given that breadth, it’s not surprising that it covers many modules and collateral that also exist in other data engineer and data analyst learning paths. I expect the exam material to change as the product matures and additional functionality is added, which will be interesting to review over time. It’s currently quite PowerBI focused, and I don’t expect that to change a great deal.

While it’s natural to question where an “Analytics Engineer” sits in terms of role alignment, given that data analyst and data engineer roles are more common in the industry than analytics engineer roles, I do feel that the exam covers enough topics across both areas to live up to the name. Potential candidates will need in-depth knowledge of SQL, Spark, and DAX, as well as experience developing Fabric analytics workloads and managing or administering Fabric capacities. Some topics are covered with a purely Fabric lens (e.g. version control, deployment best practices, and orchestration), which is totally reasonable, but candidates should consider broader foundational analytics engineering training to cover the aspects of these topics that aren’t in the core DP-600 learning.

I think that any data practitioners interested in, or needing to prepare for, working with Fabric would benefit from undertaking the DP-600 learning and exam.

What is the Analytics Engineer Certification and Who is it Aimed at

Before getting into details, it’s worth mentioning that the Fabric Analytics Engineer Associate certification follows a similar structure to other Azure certifications: the name of the certification and the name of the exam are different, but passing the DP-600 exam grants the certification. As such, I will use the two terms interchangeably.

The exam details do a good job of describing the experience needed and the skills measured.

Experience Needed:

- Data modeling

- Data transformation

- Git-based source control

- Exploratory analytics

- Languages, including Structured Query Language (SQL), Data Analysis Expressions (DAX), and PySpark

Skills measured:

- Plan, implement, and manage a solution for data analytics (10–15%)

- Prepare and serve data (40–45%)

- Implement and manage semantic models (20–25%)

- Explore and analyze data (20–25%)

To anyone already operating in the domain, many of the skills and much of the experience assessed won’t be surprising. I think most of the material will feel familiar to data practitioners working in Azure, especially PowerBI experts, but bear in mind that most candidates will feel stronger in, or more closely aligned to, either the analytics or the engineering topics. Interestingly, it isn’t immediately obvious whether an analytics-focused or engineering-focused background would be more beneficial, although at first glance the syllabus reads as slightly more focused on analytics. I will explain specifics in the recommendations below but, ultimately, I feel the exam is aimed at people who want a rounded view of managing Fabric capacities and building and deploying Fabric workloads, whatever their title may be.

Exam Preparation

For context, I took the DP-600 in early April 2024, and given that it was only in beta in early 2024, there weren’t many high-quality courses from reputable trainers. I used Microsoft Learn’s self-paced learning (available here) and practice assessment.

I’ve since seen a number of Reddit posts (r/MicrosoftFabric) and YouTube videos (from channels such as Guy in a Cube) that look promising, and I’m sure popular trainers in the Azure space such as John Savill and James Lee will have DP-600 training content in future.

There are a couple of additions worth pointing out here. Though I was confident in my SQL, PySpark, and PowerBI skills, if you’re new to the domain or see room for improvement in one of those areas, I would recommend resources such as Udemy or Coursera SQL courses, Cloudera or Databricks PySpark training, MSLearn PowerBI training, or your favourite training provider to close any gaps. It’s worth noting that the exam expects a level of SQL and PySpark knowledge beyond what is covered in the MSLearn labs.

My Experience & Recommendations

Before sharing focus areas for learning and key topics from the exam, there are a few practical points to call out:

- Do the practice assessment Microsoft offers (on the DP-600 page linked above). None of its questions came up on the exam, but I felt they were exactly the right level of difficulty and covered a good spread of topic areas to assess readiness before booking the exam. The webpage did crash on me and didn’t remember my progress (I assume the question set is randomised), so I would suggest completing it in one sitting.

- Do all the labs / hands-on demos. It’s very tempting to skip these, as much of the text repeats the theoretical material covered in the learning modules, but there really is no substitute for hands-on experience. Tellingly, the only topics I struggled with in the practice assessment were ones whose labs I had skipped.

- Get used to navigating MSLearn, as you can open an MSLearn window during the exam. It’s not quite “open book”, and navigating MSLearn using only its own search bar is definitely trickier than using search-engine-optimised results, but navigating it effectively means you don’t always need to remember the finest details. That said, it is time consuming, so I used it sparingly during the exam and only when I knew where to find the answer quickly. Note that this won’t help for most PySpark or SQL syntax / code questions.

- Though it’s not a prerequisite, I would firmly recommend taking the PL-300 (PowerBI Data Analyst Associate) exam before DP-600. So much of the DP-600 learning is based on understanding DAX, PowerBI semantic modelling and best practices, and PowerBI external tools (Tabular Editor, DAX Studio, ALM Toolkit). Those who have taken PL-300 will have a much easier time with the DP-600 exam.

As for topic areas covered during the exam:

- Intermediate to advanced SQL knowledge - most SQL questions rely on knowledge that MSLearn covers only through a few examples. This includes joins; WHERE, GROUP BY and HAVING clauses; CTEs; window functions with partitioning (ROW_NUMBER, RANK, DENSE_RANK, LEAD and LAG); and date/time functions

- PowerBI - in addition to the areas noted above in relation to PL-300, I would also call out creating measures, using VAR and SWITCH, loading data into PowerBI, using time intelligence functions, using the data profiling tools in PowerBI, and using inactive relationships (with functions such as USERELATIONSHIP). Managing datasets using XMLA endpoints and general model security are also key topic areas

- Beginner to intermediate PySpark (as well as Jupyter Notebooks and Delta Lake tables) - in all honesty, the more hands-on PySpark experience you have, the better. That said, I was only specifically asked about things like the correct syntax for reading / writing data to tables, profiling dataframes, filtering and summarising dataframes, and using visualisation libraries (matplotlib, plotly)

- Fabric Shortcuts - the learning covers basic examples relatively clearly, but it’s worth understanding how shortcuts work in more complex scenarios such as multi-workspace or cross-account setups (the same applies to Warehouses), and how shortcuts relate to the underlying data (e.g. what happens when a shortcut is deleted)

- Core data engineering concepts such as Change Data Capture (CDC), batch vs. real-time / streaming data, query optimisation, data flows and orchestration, indexes, and data security [Row-Level Security (RLS) and Role-Based Access Control (RBAC)]

- Storage optimisation techniques for Delta tables, such as the OPTIMIZE and VACUUM commands

- Fabric licenses and capabilities (see here)

- Source control best practices for Fabric and PowerBI

- Knowledge of carrying out Fabric admin tasks - for example, knowing where capacity increases are made (Azure portal), where XMLA endpoints are disabled (Tenant settings), and where high concurrency is enabled (Workspace settings)
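To make the SQL topic area above a little more concrete, here is a minimal sketch of a single query that combines a CTE, a join, WHERE, GROUP BY/HAVING, ROW_NUMBER/RANK/DENSE_RANK, LEAD/LAG and a date function. It uses Python’s built-in sqlite3 module purely so it runs without a Fabric workspace (SQLite 3.25 or later is needed for window functions). The `sales` table and its rows are hypothetical, and `strftime` is SQLite-specific: a Fabric Warehouse or SQL analytics endpoint uses T-SQL, where you would reach for functions such as `FORMAT` or `DATEPART` instead.

```python
import sqlite3

# Hypothetical sample data: (region, sale_date, amount). Illustration only.
ROWS = [
    ("North", "2024-01-05", 100),
    ("North", "2024-01-12", 250),
    ("North", "2024-01-19", 250),
    ("South", "2024-01-03", 80),
    ("South", "2024-01-10", 120),
    ("West", "2024-01-08", 40),
]

QUERY = """
WITH region_totals AS (
    -- GROUP BY + HAVING: keep only regions whose total sales exceed 100
    SELECT region, SUM(amount) AS total
    FROM sales
    GROUP BY region
    HAVING SUM(amount) > 100
)
SELECT
    s.region,
    s.sale_date,
    s.amount,
    -- ROW_NUMBER is always unique; sale_date breaks ties deterministically
    ROW_NUMBER() OVER (PARTITION BY s.region ORDER BY s.amount DESC, s.sale_date) AS row_num,
    -- RANK leaves a gap after ties; DENSE_RANK does not
    RANK() OVER (PARTITION BY s.region ORDER BY s.amount DESC) AS rnk,
    DENSE_RANK() OVER (PARTITION BY s.region ORDER BY s.amount DESC) AS dense_rnk,
    -- LAG/LEAD return the previous/next sale amount within the same region
    LAG(s.amount) OVER (PARTITION BY s.region ORDER BY s.sale_date) AS prev_amount,
    LEAD(s.amount) OVER (PARTITION BY s.region ORDER BY s.sale_date) AS next_amount,
    -- Date function (SQLite dialect): extract year-month from an ISO date string
    strftime('%Y-%m', s.sale_date) AS sale_month
FROM sales AS s
JOIN region_totals AS t ON t.region = s.region
WHERE s.sale_date >= '2024-01-01'
ORDER BY s.region, s.sale_date
"""


def run_demo():
    """Load the hypothetical rows into an in-memory SQLite database and run QUERY."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, sale_date TEXT, amount INTEGER)")
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", ROWS)
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in run_demo():
        print(row)
```

The HAVING clause drops the West region entirely. Comparing the `rnk` and `dense_rnk` columns for the two tied North rows shows the difference between RANK and DENSE_RANK: both tied rows get 1, but the next row gets 3 under RANK and 2 under DENSE_RANK.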

What next?

I have no certifications planned for now, but I plan to build something around MS Purview, similar to what I did with AWS DataZone. Over the next few months I will decide on my next certification step, but given the recent OpenAI-related additions to AI-102 (AI Engineer Associate), I will likely start there.