Microsoft Fabric Capacity Sizing & Estimation

Intro & Context

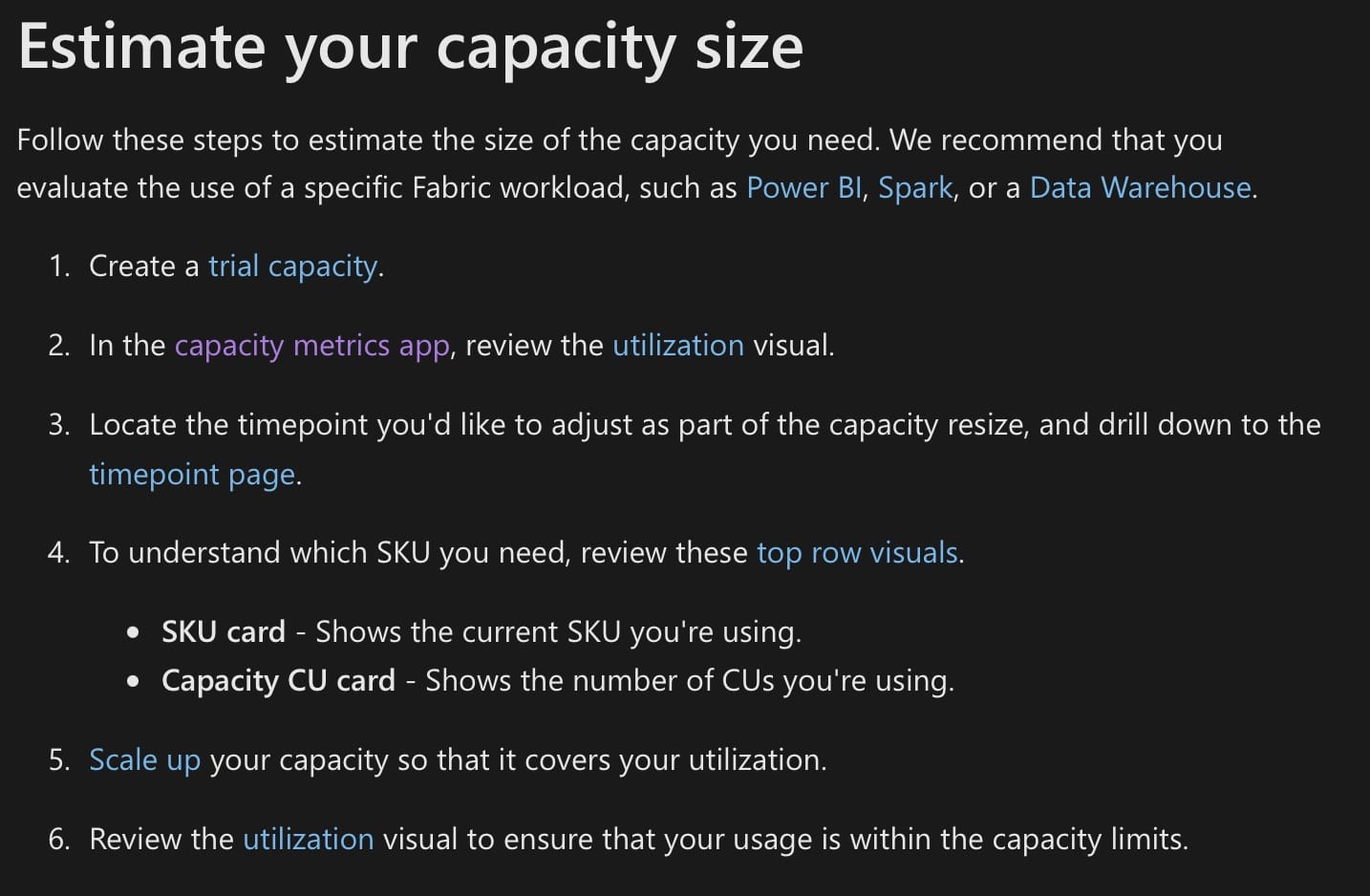

Until recently, the only easily accessible information for capacity planning in Microsoft Fabric was the pricing published on the Microsoft website, which made it difficult to anticipate the right SKU. The most common guidance I've seen is to make use of the 60-day free trial, run some realistic workloads, and monitor consumption through the Fabric Capacity Metrics app (or Fabric Unified Admin Monitoring). In fact, at the time of writing, this was the guidance provided:

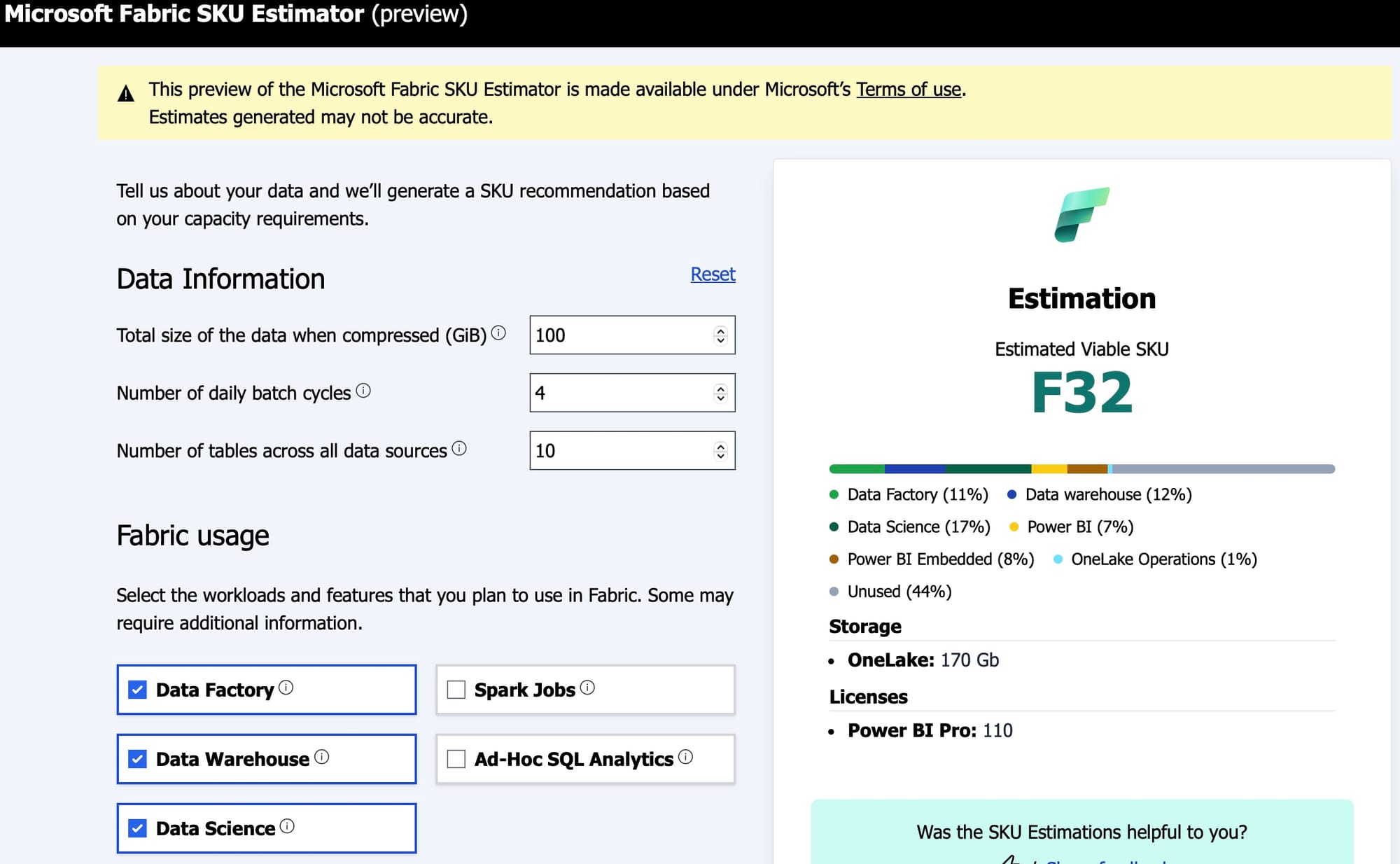

Earlier this month, Microsoft released the Fabric Capacity Estimator in public preview to support potential customers with this challenge.

My experience with the new Capacity Estimator

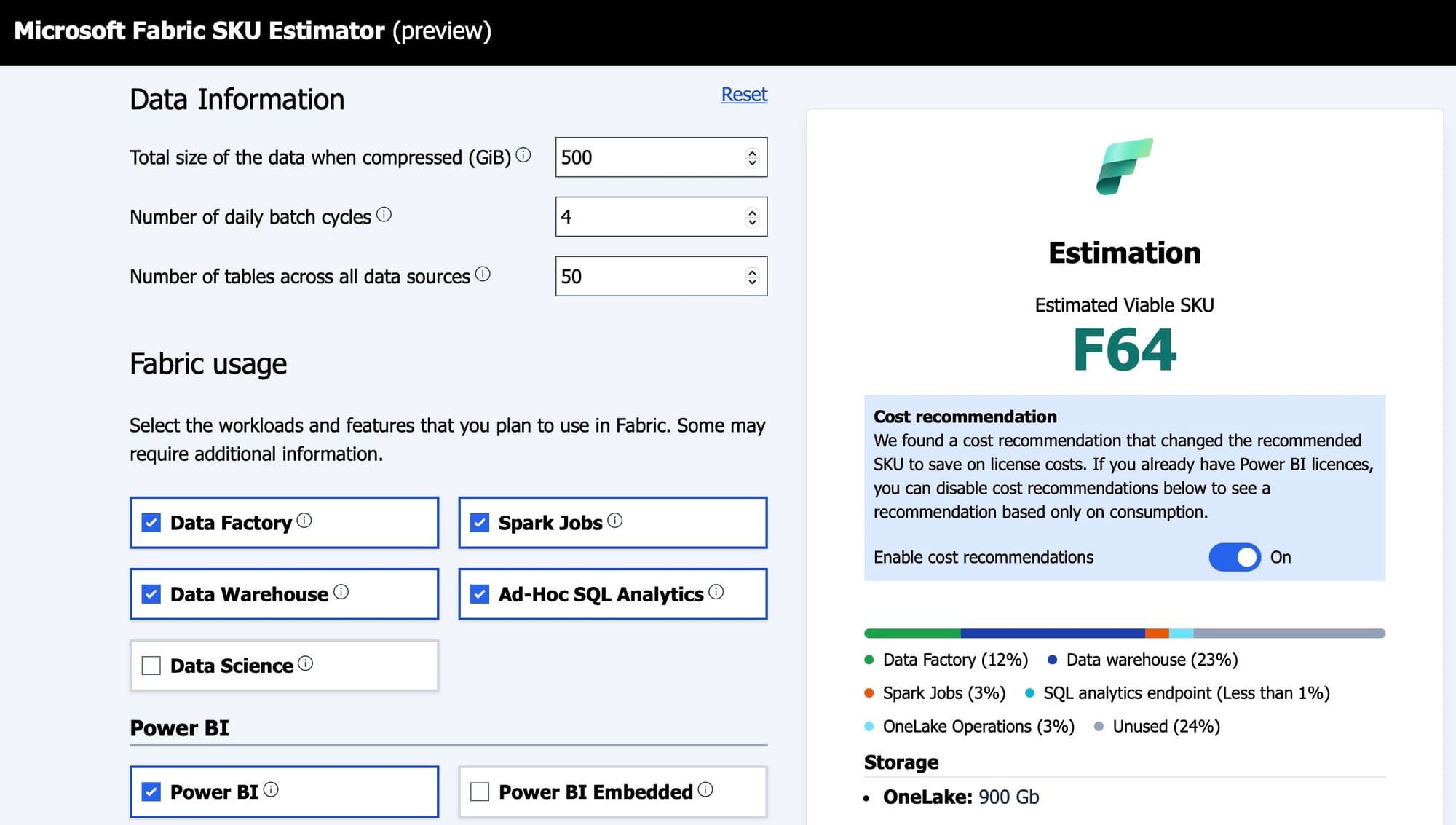

I was fortunate enough to get access to the capacity estimator during private preview after attending FabCon Europe in September 2024, so I've been able to use it during implementation planning for two new Fabric projects and one ongoing one, as well as for a few checks against existing capacities. Not much has changed in the front end since the private preview: as far as I can tell, the only differences are a new (optional) "Power BI model size (Gb)" input and some "cost recommendations" that occasionally appear, though there have likely been some tweaks to the backend calculations.

In my experience, the estimator has been reasonably accurate as long as some care is taken with the inputs, so before sharing a few recommendations, it's worth saying that I think it's a good addition to the documentation and tooling Microsoft offer. That said, I believe the capacity estimator is most useful for those using low-code engineering tools such as Dataflow Gen2 and Data Pipelines.

For those running purely pro-code workloads (mainly notebooks), it's trickier: most of the estimated consumption comes from the data warehouse and Power BI inputs, the Spark Jobs input isn't adjustable, and there's no input for hours of notebook execution. I understand why this is hard to include, as notebook consumption isn't one-size-fits-all; it depends not just on run time but also on cluster sizing and environment setup (e.g. the native execution engine).
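Until the estimator has a notebook input, one rough way to sanity-check notebook consumption yourself is to convert cluster size and run time into capacity units. The Python sketch below assumes the documented ratio of two Spark VCores per capacity unit (CU) and the standard Spark node sizes (Small = 4 vCores up to XX-Large = 64); the function names, the three-node Medium session, and the 1.5 hours a day of run time are hypothetical. It ignores smoothing, bursting, and throttling, and the native execution engine only shows up through whatever run time you plug in.

```python
# Rough notebook (Spark) consumption sketch.
# Assumption: 2 Spark VCores = 1 capacity unit (CU); check against current Fabric docs.
SPARK_VCORES_PER_CU = 2
SECONDS_PER_DAY = 24 * 60 * 60

# Assumed Spark node sizes (vCores per node); verify against your pool configuration.
NODE_VCORES = {"small": 4, "medium": 8, "large": 16, "x-large": 32, "xx-large": 64}


def notebook_cu_seconds(nodes: int, node_size: str, runtime_hours: float) -> float:
    """Hypothetical helper: CU-seconds used by a session of `nodes` nodes
    (driver + executors) of `node_size`, running for `runtime_hours`."""
    vcores = nodes * NODE_VCORES[node_size]
    return vcores / SPARK_VCORES_PER_CU * runtime_hours * 3600


def share_of_daily_capacity(cu_seconds: float, sku_cus: int) -> float:
    """Hypothetical helper: fraction of an F SKU's daily CU-second budget
    (CUs x 86,400 seconds) that `cu_seconds` represents."""
    return cu_seconds / (sku_cus * SECONDS_PER_DAY)


if __name__ == "__main__":
    # Hypothetical workload: 1 driver + 2 executors on Medium nodes, 1.5 hours a day.
    daily = notebook_cu_seconds(nodes=3, node_size="medium", runtime_hours=1.5)
    print(f"{daily:,.0f} CU-seconds per day")
    print(f"{share_of_daily_capacity(daily, sku_cus=8):.1%} of an F8's daily budget")
```

Treat the result as a lower bound for planning: interactive sessions that sit idle before timing out also consume CUs, so measured run time alone can understate real usage.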

Based on what I've seen, I would suggest:

- Although every input affects the output, I've found that the most weight is given to Data Factory (hours of daily Dataflow Gen2 ETL operations), Data Warehouse (data size), and Data Science (training jobs), so be as specific as possible with these inputs

- For the hours of Dataflow Gen2 ETL operations, enter the total active hours summed across all workloads, ignoring parallelization (e.g. two pipelines running in parallel for 2 hours take 2 hours of wall-clock time, but you should enter 4 hours here)

- The data size input refers to compressed data. There's no one-size-fits-all ratio, but when moving from SQL databases I usually enter around 60% of the overall source data size, to account for the Parquet compression applied when data is written to Fabric

- Treat the Data Warehouse input as a general "data analytics store"; there isn't a lakehouse option in the estimator

- When calculating Total Cost of Ownership (TCO), remember that the capacity estimator doesn't cover licensing or data storage costs, so factor these in separately. For licensing, remember that report viewers don't need their own Power BI Pro licenses on F64 and larger SKUs, whereas on smaller SKUs they do. As a result, the total cost is:

- C (cost) = W (SKU cost) + X (Power BI report consumer licensing) + Y (Power BI report developer licensing) + Z (storage cost)

- Depending on the number of report viewers (who need Pro licenses below F64), running an F32 capacity can cost as much as or more than running an F64, so calculate the numbers once you have a SKU estimate

- Consider whether all your assets need to be backed by a Fabric SKU. Mixing Fabric-backed workspaces with Power BI Pro workspaces is often a good option and can reduce capacity consumption

- If in doubt, lean towards the smaller capacity. Besides moving some assets to Power BI Pro workspaces or optimizing workloads for capacity unit consumption (e.g. using notebooks rather than Dataflow Gen2), migrating workloads to any new solution usually takes time, so you're unlikely to need the full capacity immediately. Additionally, I'd expect the most cost-effective way to run Fabric in production to be a 12-month capacity reservation; unless you're sure you'll need the larger capacity for at least 3 of those months, it makes more sense to reserve the smaller capacity and scale up (with pay-as-you-go billing) if required
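The two input rules of thumb above (summing active hours without collapsing parallel runs, and scaling source data down to a compressed size) can be sketched in a few lines of Python. The `DataflowRun` class and both helper functions are hypothetical names for illustration, and the default 0.6 ratio is simply the ~60% rule of thumb for SQL sources mentioned above, not a fixed property of Fabric.

```python
from dataclasses import dataclass


@dataclass
class DataflowRun:
    """Hypothetical record of one daily Dataflow Gen2 / pipeline workload."""
    name: str
    hours_per_day: float  # active run time of this workload per day


def total_active_hours(runs: list[DataflowRun]) -> float:
    """Sum active hours across all workloads. Parallel runs are counted
    separately rather than merged into wall-clock time."""
    return sum(r.hours_per_day for r in runs)


def compressed_size_gb(source_size_gb: float, ratio: float = 0.6) -> float:
    """Approximate the compressed size to enter in the estimator.
    The 0.6 default is a rule of thumb for SQL sources, not a constant."""
    if not 0 < ratio <= 1:
        raise ValueError("ratio must be in (0, 1]")
    return source_size_gb * ratio


if __name__ == "__main__":
    # Two pipelines running in parallel for 2 hours each.
    runs = [DataflowRun("sales", 2.0), DataflowRun("finance", 2.0)]
    print(total_active_hours(runs))  # 4.0, not the 2 hours of wall-clock time
    print(compressed_size_gb(500))  # hypothetical 500 GB SQL source
```

Keeping the ratio as a parameter makes it easy to swap in a figure measured from a pilot load, which will usually be more reliable than any general rule.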
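The TCO formula C = W + X + Y + Z can be turned into a quick comparison between SKUs. The Python sketch below is a minimal version, assuming that report viewers need a Power BI Pro license below F64 and none from F64 upwards, while developers always need one. The `TcoInputs` class and helper names are hypothetical, and all prices in the usage example are made-up round numbers rather than real list prices, so substitute your own figures.

```python
from dataclasses import dataclass


@dataclass
class TcoInputs:
    """Hypothetical monthly inputs for one SKU option."""
    sku: str                     # e.g. "F32" or "F64"
    sku_monthly_cost: float      # W: capacity cost per month
    viewers: int                 # report consumers
    developers: int              # report developers
    pro_license_cost: float      # monthly cost of one Power BI Pro license
    storage_monthly_cost: float  # Z: storage cost per month


def sku_size(sku: str) -> int:
    """Return the CU count in an F SKU name, e.g. "F64" -> 64."""
    sku = sku.upper()
    if not sku.startswith("F"):
        raise ValueError(f"not an F SKU: {sku}")
    return int(sku[1:])


def monthly_tco(t: TcoInputs) -> float:
    """C = W + X + Y + Z."""
    # X: viewers need Pro licenses below F64; from F64 up, viewing is covered.
    viewer_licensing = 0.0 if sku_size(t.sku) >= 64 else t.viewers * t.pro_license_cost
    # Y: developers need Pro licenses regardless of SKU.
    developer_licensing = t.developers * t.pro_license_cost
    return t.sku_monthly_cost + viewer_licensing + developer_licensing + t.storage_monthly_cost


if __name__ == "__main__":
    # Hypothetical prices: 300 viewers, 10 developers, same storage cost for both options.
    f32 = TcoInputs("F32", 4000, viewers=300, developers=10, pro_license_cost=14, storage_monthly_cost=100)
    f64 = TcoInputs("F64", 8000, viewers=300, developers=10, pro_license_cost=14, storage_monthly_cost=100)
    for option in (f32, f64):
        print(option.sku, monthly_tco(option))
```

With these made-up numbers, the F64 comes out slightly cheaper than the F32 because 300 viewer licenses outweigh the difference in capacity cost, which is exactly the situation where it pays to run the numbers after getting a SKU estimate.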

Additional Factors

A couple of things I haven't considered here are:

- AI features such as Fabric data agents or Copilot. These aren't factored into the capacity estimator either, so do your due diligence on how their consumption would affect your capacity estimate

- Autoscale Billing for Spark (serverless). This will likely change capacity planning for some Spark workloads. It was only announced a few weeks before the capacity estimator was released and there will be plenty to learn in the near future, but for now I think it's best to assume the capacity estimator is designed for standard capacity billing scenarios

- Other features will also change capacity consumption. In my mind, these are mostly preview features (such as user data functions) and monitoring. Leaving preview features out of the estimator makes sense, as adoption will vary and their consumption may change, but it's worth considering what they might mean for your own case. For monitoring, the impact depends on your approach, but I would leave a little headroom if you implement something like Fabric Unified Admin Monitoring (FUAM)