MS Fabric Copilot - Recommendations and Pricing Considerations

TLDR

Ultimately, Fabric Copilot looks to be a really simple way to integrate OpenAI services into a developer’s workflow, with a single method of billing and usage monitoring through your Fabric SKU. Some assumptions will need to be made and tested in order to baseline cost accurately, and there are certainly considerations around the highest-value use cases, but the cost model is appealing. I consider Fabric Copilot worth using, or at least trialling and assessing for your use cases, with appropriate considerations.

As with GitHub Copilot, Amazon CodeWhisperer, and other tools in this space, I think the focus should be on accelerating development and shifting the focus of skilled developers to more complex or higher-value tasks.

Context

In March, Ruixin Xu shared a community blog detailing a Fabric Copilot pricing example, building on the February announcement of Fabric Copilot pricing. It’s exceptionally useful, explaining how Fabric Copilot works and the maths behind calculating consumption through an end-to-end example. I’m aiming to minimise any regurgitation here, but I’m keen to add my view on key considerations for rolling out Fabric Copilot. For the purpose of this blog, I will focus on cost and value considerations, with any views on technical application and accuracy to be compiled in a follow-up blog.

Enabling Fabric Copilot

First, it’s worth sharing some “mechanical” considerations:

- Copilot in Fabric is limited to F64 or higher capacities. These start at $11.52/hour or $8,409.60/month on pay-as-you-go pricing, excluding OneLake storage costs

- Copilot needs to be enabled at the tenant level

- AI services in Fabric are currently in preview

- If your tenant or capacity is outside the US or France, Copilot is disabled by default. From MS docs - “Azure OpenAI Service is powered by large language models that are currently only deployed to US datacenters (East US, East US2, South Central US, and West US) and EU datacenter (France Central). If your data is outside the US or EU, the feature is disabled by default unless your tenant admin enables ‘Data sent to Azure OpenAI can be processed outside your capacity's geographic region, compliance boundary, or national cloud instance’ tenant setting”
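To make the F64 pricing above easier to reason about, here is a minimal sketch that converts the quoted hourly rate into a monthly figure and a price per CU-second. The 730 hours per month is my assumption (it is what reconciles $11.52/hour with $8,409.60/month), and the function names are purely illustrative rather than anything from Fabric itself.

```python
# Figures quoted in this post for an F64 capacity on pay-as-you-go pricing.
F64_PRICE_PER_HOUR_USD = 11.52
F64_CAPACITY_UNITS = 64

# Assumption: a billing month of 730 hours, since 8,409.60 / 11.52 = 730.
HOURS_PER_MONTH = 730
SECONDS_PER_HOUR = 3600


def monthly_price(price_per_hour: float, hours_per_month: int = HOURS_PER_MONTH) -> float:
    """Monthly cost of running the capacity continuously."""
    return price_per_hour * hours_per_month


def price_per_cu_second(price_per_hour: float, capacity_units: int) -> float:
    """Cost of one capacity unit (CU) for one second."""
    return price_per_hour / (capacity_units * SECONDS_PER_HOUR)


if __name__ == "__main__":
    print(f"Monthly: ${monthly_price(F64_PRICE_PER_HOUR_USD):,.2f}")
    print(f"Per CU-second: ${price_per_cu_second(F64_PRICE_PER_HOUR_USD, F64_CAPACITY_UNITS):.5f}")
```

On these figures, one CU-second works out at roughly $0.00005, which is a handy multiplier when reading CU consumption numbers.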

Recommendations

- My first recommendation is to “give it a go” yourself. Based on how Fabric Copilot pricing works at the time of writing, I can see clear value in developers utilising Fabric Copilot - even for a simple example that optimistically might only take 30 minutes of development, a $0.63 cost feels pretty hard to beat. It’s hard to imagine Fabric Copilot costing more than a developer’s time.

- Consider who actually needs or benefits from access to Copilot. From what I’ve seen so far, the primary use case is around accelerating development for analysts and engineers, so those consuming reports might see much less value in comparison. Personally, I would also recommend any outputs are appropriately tested and reviewed by someone with the capability of building or developing without Copilot (for now, at least).

- Unsurprisingly, it feels as though Copilot could really accelerate analytics and engineering development, but I think it’s crucial that organisations considering adoption roll out Copilot in stages, starting with small user groups. This is for two reasons: it supports the next recommendation on my list, and it helps in managing and monitoring resources at a smaller scale before considering the impact on your capacity.

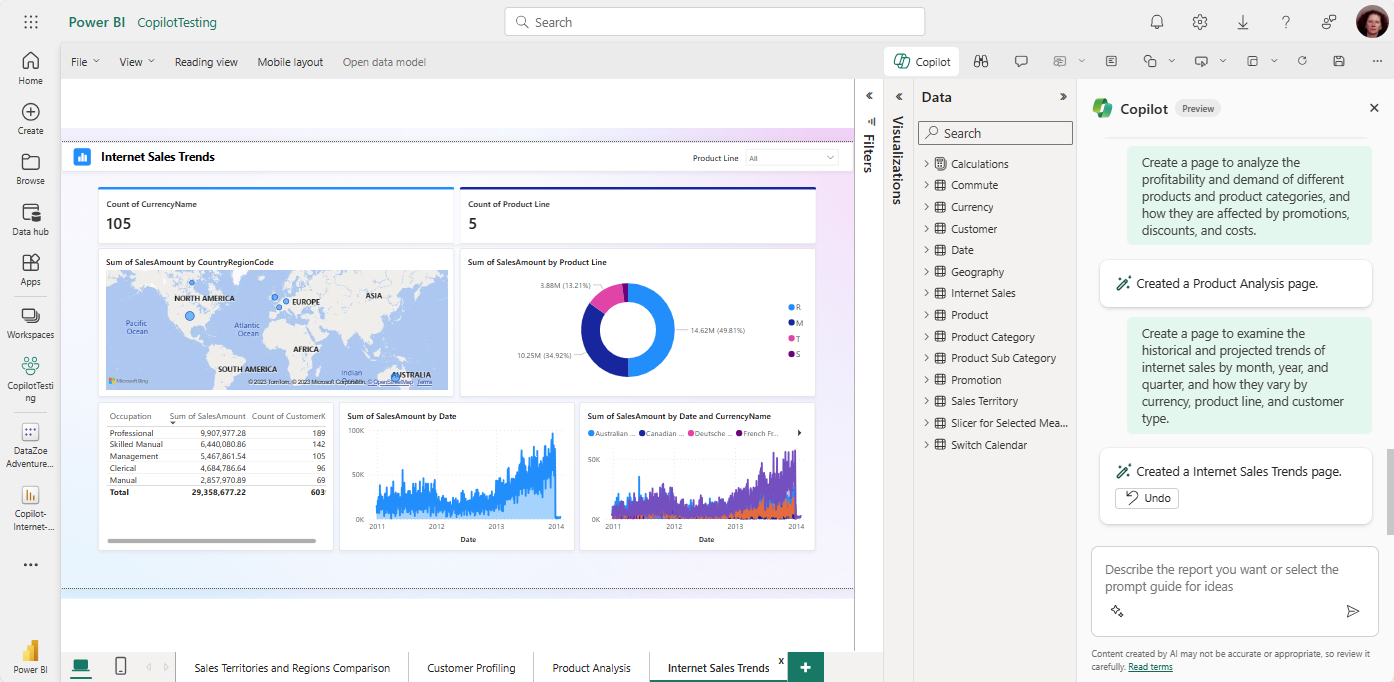

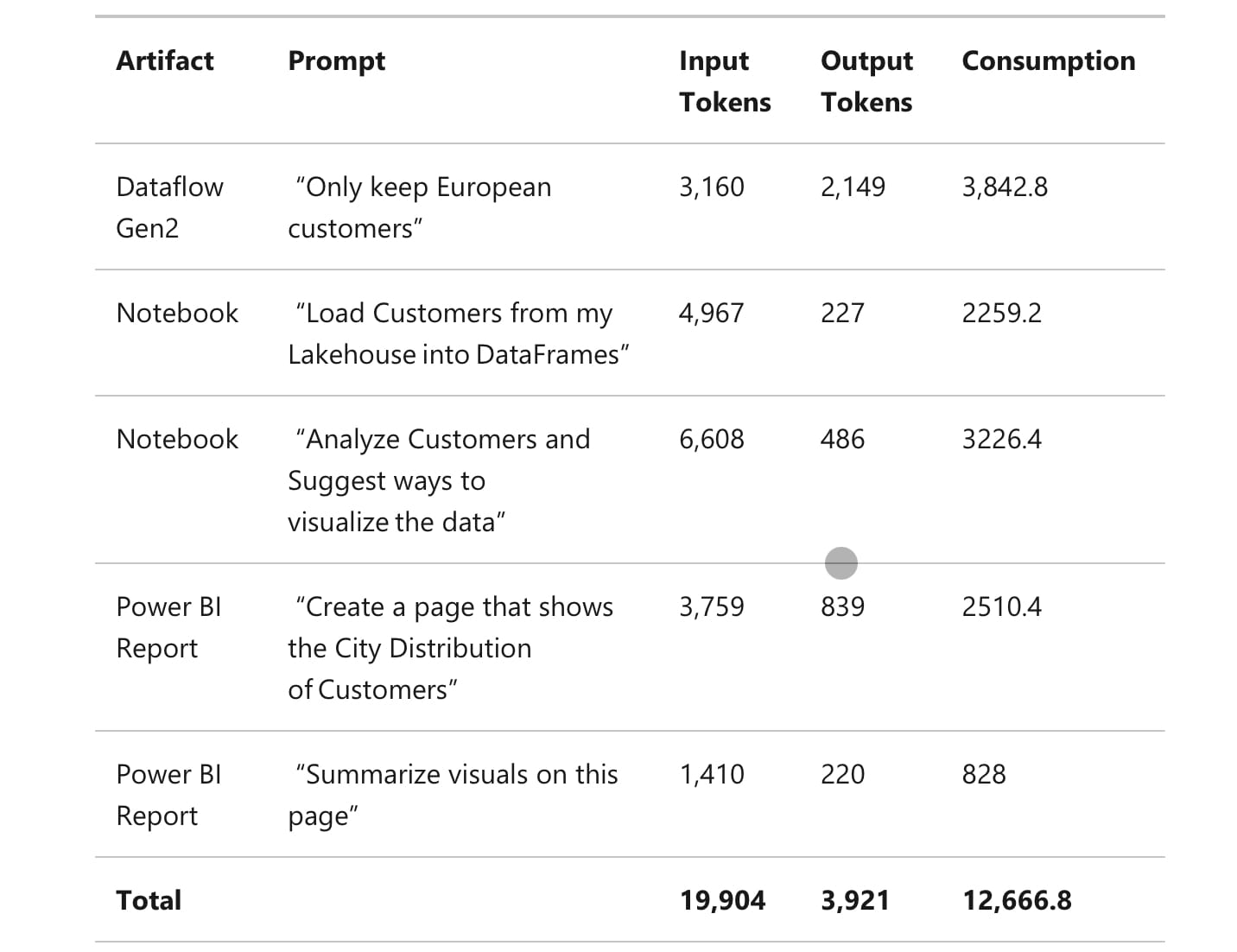

- I think it will be important to build out your internal best-practice guidance / knowledge base. For example, in Ruixin’s blog, the CU consumption for creating a dataflow that keeps only European customers was about 50% more than what was required for creating a Power BI page on customer distribution by geography. In my opinion, the time saved is larger in the Power BI use case than for a simple dataflow column filter. In this example, I would also suggest that it’s best practice to use Copilot to generate a starting point for Power BI reports, rather than something ready for publication / consumption. As is the case in many applications of Generative AI, standardising Fabric Copilot inputs is likely to bring additional value through more consistent costs, so developing a prompt library could be useful.

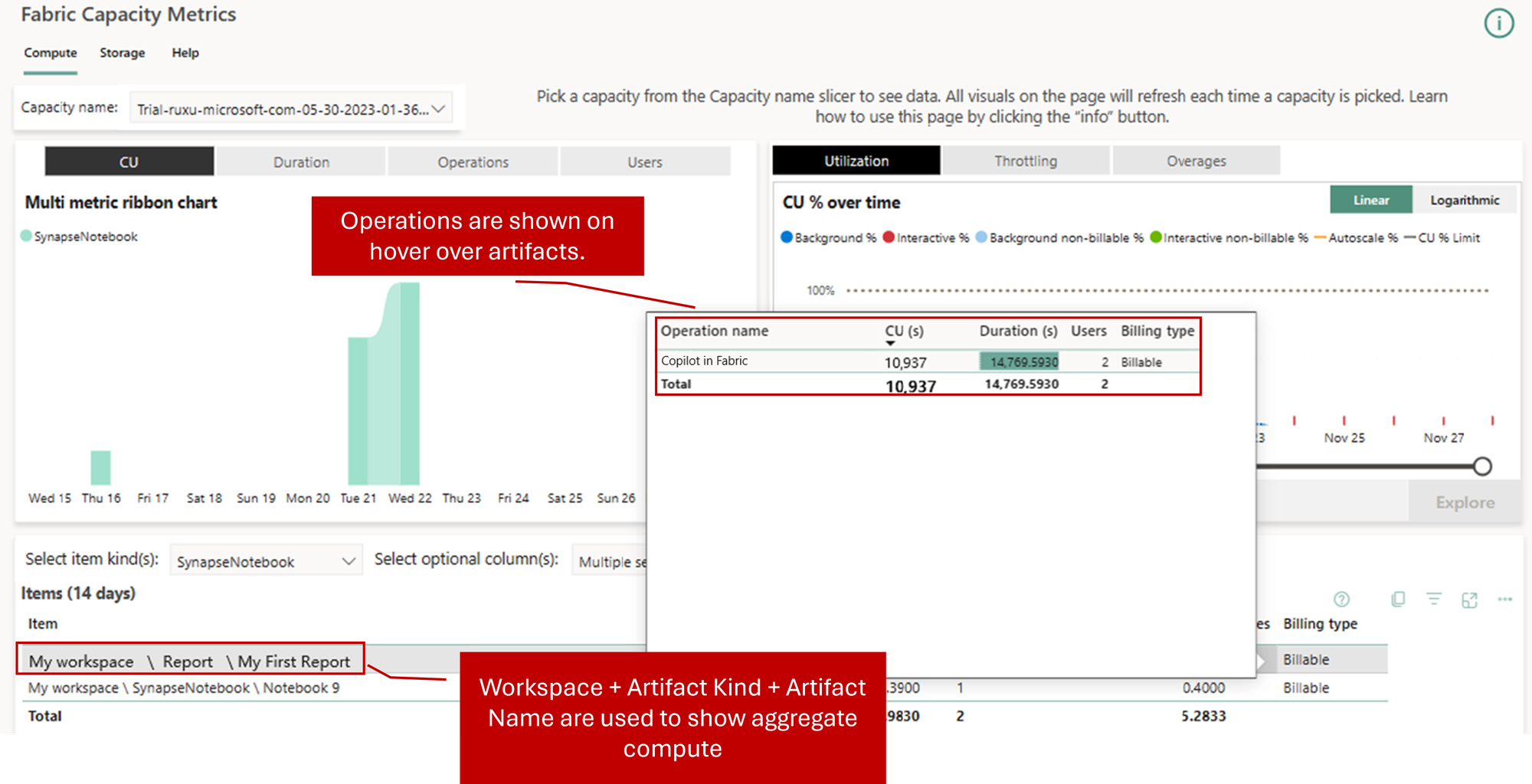

- You need to plan for expected CU consumption based on users and capacity SKU. Admittedly this seems obvious, and it is likely only an issue at a scale where multiple people are using Fabric Copilot at once against the same capacity. For context, although organisations with more than 20 developers may be on a larger capacity than F64, a team of 20 developers each submitting Fabric Copilot queries of a similar cost to those detailed in the blog (copied below) would consume 20 * 12,666.8 = 253,336 CU seconds, which is about 110% of the CU seconds available on an F64 in a given hour (64 * 3600 = 230,400). Admittedly, this needs to be considered over a 24-hour period, and it’s unlikely that parallel requests will be submitted outside standard working hours, but it should be evaluated alongside other processes such as pipelines and data refreshes.

- Much of the Microsoft guidance on data modelling for Copilot is generally considered good practice for Power BI modelling anyway, but I would still recommend assessing your data model and adapting it in line with that guidance in order to get the most out of Fabric (or Power BI) Copilot.
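The capacity planning arithmetic from the recommendations above can be sketched as a small calculator. This is a rough sketch under stated assumptions: the 12,666.8 CU seconds per developer is the example figure quoted in this post, the helper names are hypothetical, and comparing consumption against a 24-hour window is a simplification of how a capacity would actually absorb the load alongside pipelines and refreshes.

```python
# Example figures quoted in this post; replace them with your own measurements.
CU_SECONDS_PER_DEVELOPER = 12_666.8  # Copilot consumption per developer in the example
F64_CAPACITY_UNITS = 64
F64_PRICE_PER_HOUR_USD = 11.52
SECONDS_PER_HOUR = 3600


def cu_seconds_available(capacity_units: int, hours: float = 1.0) -> float:
    """CU seconds a capacity provides over the given number of hours."""
    return capacity_units * SECONDS_PER_HOUR * hours


def capacity_share(developers: int, cu_seconds_each: float,
                   capacity_units: int, hours: float = 1.0) -> float:
    """Fraction of the available CU seconds the team would consume."""
    used = developers * cu_seconds_each
    return used / cu_seconds_available(capacity_units, hours)


def request_cost_usd(cu_seconds: float, price_per_hour: float,
                     capacity_units: int) -> float:
    """Approximate pay-as-you-go cost of a given CU-second consumption."""
    return cu_seconds * price_per_hour / cu_seconds_available(capacity_units)


if __name__ == "__main__":
    hourly = capacity_share(20, CU_SECONDS_PER_DEVELOPER, F64_CAPACITY_UNITS)
    daily = capacity_share(20, CU_SECONDS_PER_DEVELOPER, F64_CAPACITY_UNITS, hours=24)
    cost = request_cost_usd(CU_SECONDS_PER_DEVELOPER, F64_PRICE_PER_HOUR_USD,
                            F64_CAPACITY_UNITS)
    print(f"Share of one hour of F64: {hourly:.0%}")
    print(f"Share of 24 hours of F64: {daily:.1%}")
    print(f"Cost per developer: ${cost:.2f}")
```

With the example figures, 20 developers come out at roughly 110% of a single hour of F64 capacity but under 5% of a full day, and the per-developer cost lands at about $0.63. The gap between the hourly and daily views is why it is worth modelling both before widening access.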